How Discord stores Trillions of Messages

Discord's Journey from Billions to Trillions: A Deep Dive into Message Storage Evolution

Introduction

Imagine being responsible for storing trillions of messages that millions of users depend on every day. This isn't just a hypothetical scenario – it's the reality that Discord's engineering team faced as they scaled their platform from billions to trillions of messages. In this comprehensive case study, we'll explore Discord's fascinating journey of database migration, architectural decisions, and the intricate details of how they tackled one of the most challenging scaling problems in modern tech.

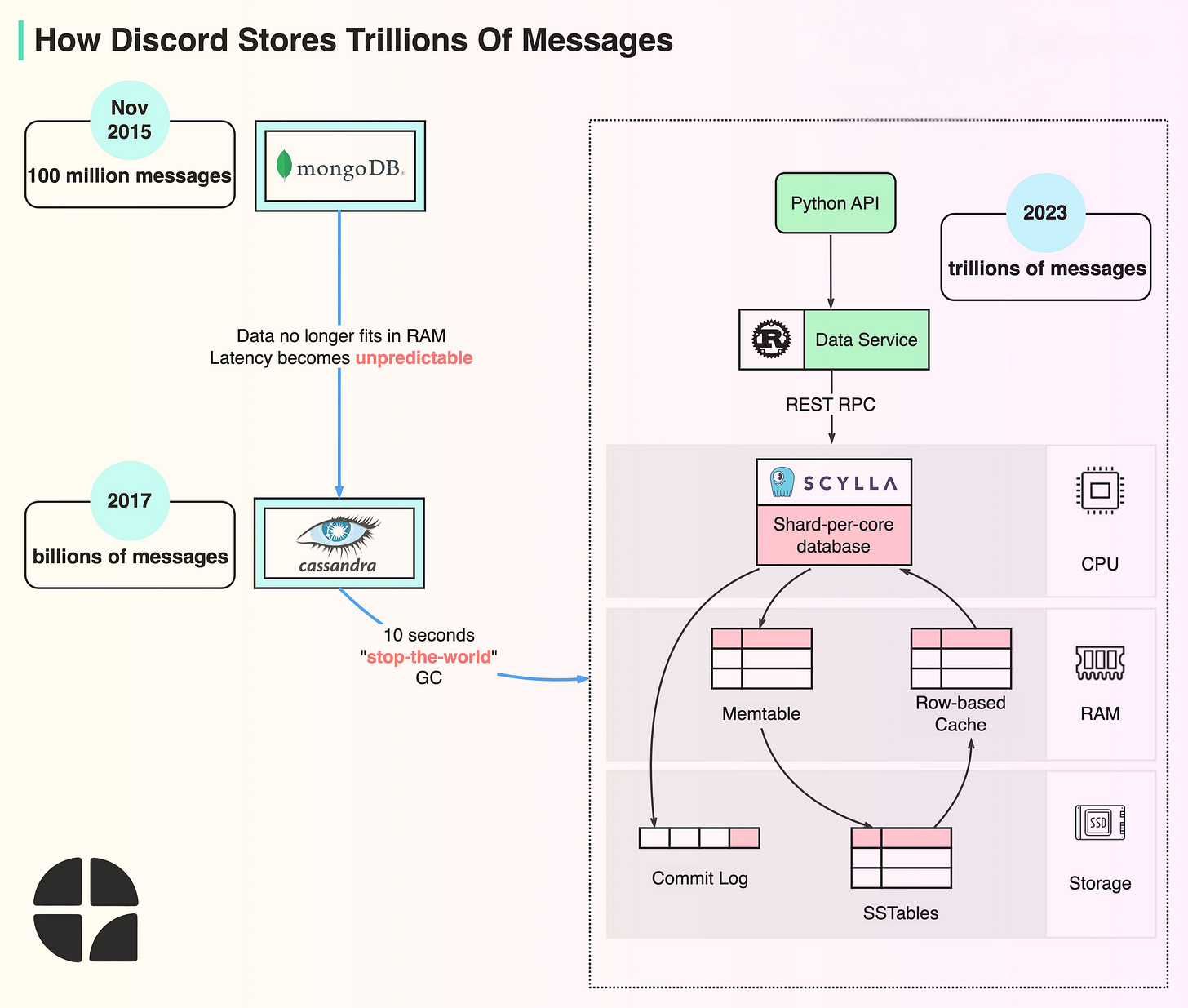

The Initial Architecture: MongoDB to Cassandra

Early Days with MongoDB

When Discord first launched, like many startups, they chose MongoDB as their initial database solution. MongoDB offered:

Flexible document schema

Horizontal scalability

Familiar JSON-like data structure

Strong community support

However, as Discord's user base grew, limitations became apparent:

Scaling challenges with large datasets

Complex maintenance requirements

Performance inconsistencies at scale

The First Migration: Cassandra

In 2017, Discord's engineering team made their first major database transition from MongoDB to Cassandra. The decision was driven by the need for a scalable, fault-tolerant system that could handle their growing user base. At the time, this seemed like the perfect solution – Cassandra offered horizontal scalability, high availability, and promised to be relatively low maintenance.

The Cassandra Era: Challenges at Scale

Infrastructure Growth

By 2022, Discord's growth had pushed their Cassandra cluster to its limits:

177 nodes (from initial 12)

Trillions of messages

Multiple petabytes of data

High operational overhead

Frequent on-call incidents

Unpredictable latency spikes

Critical Issues Emerged

Message Schema Analysis:

1CREATE TABLE messages (

2 channel_id bigint,

3 bucket int,

4 message_id bigint,

5 content text,

6 PRIMARY KEY ((channel_id, bucket), message_id)

7);This schema design revealed several critical insights:

Messages were partitioned by channel_id and time-based buckets

Snowflake IDs enabled chronological sorting

The partition strategy led to uneven data distribution

The Hidden Complexity:

The real challenge wasn't just in the schema – it was in the access patterns. Discord servers range from small friend groups to communities with hundreds of thousands of members. This diversity created what the team called "hot partitions" – scenarios where specific channels received disproportionate amounts of traffic.

Technical Deep Dive:

The Hot Partition Problem When a popular channel experienced high traffic:

Reads would overwhelm the nodes containing that partition

Latency would increase across the entire cluster

Quorum consistency requirements meant slower response times

Maintenance operations like compaction became increasingly difficult

The Breaking Point:

The engineering team found themselves performing what they dubbed the "gossip dance" – a complex choreography of:

Taking nodes out of rotation

Allowing compaction to catch up

Reintegrating nodes

Managing hinted handoffs

Repeating the process

2. JVM Tuning Nightmare:

A significant portion of operational overhead came from JVM management:

Garbage collection pauses caused latency spikes

Heap size optimization became increasingly complex

GC tuning required specialized expertise

The Solution: Multi-Faceted Approach

1. Database Migration to ScyllaDB

Discord chose ScyllaDB for several key reasons:

C++ implementation eliminated GC concerns

Shard-per-core architecture improved resource utilization

Better workload isolation capabilities

Improved reverse query performance

Compatible with existing Cassandra tooling

2. Migration Strategy

Initial Plan:

Dual-write to both databases

Cutover new data to ScyllaDB

Migrate historical data in the background

Reality Check: The initial migration estimate using ScyllaDB's Spark migrator was three months – far too long given the operational issues they were facing.

The Rust Revolution:

In a classic example of engineering ingenuity, the team decided to leverage their expertise in Rust to build a custom migration tool. The results were stunning:

Migration time reduced from 3 months to 9 days

Throughput of 3.2 million messages per second

Built-in check pointing using SQLite

Automated validation through dual-reads

2. Introduction of Data Services Layer

One of the most innovative aspects of Discord's solution was the introduction of data services – intermediary layers between their API monolith and database clusters.

Key Features:

Request Coalescing:

async fn get_message(channel_id: u64, message_id: u64) -> Result<Message> {

let task_key = format!("{}:{}", channel_id, message_id);

if let Some(existing_task) = TASK_MAP.get(&task_key) {

return existing_task.subscribe().await;

}

let new_task = Task::new(async move {

// Actual database query

db.get_message(channel_id, message_id).await

});

TASK_MAP.insert(task_key.clone(), new_task.clone());

new_task.execute().await

}Consistent Hash-based Routing:

Requests for the same channel routed to the same service instance

Improved coalescing efficiency

Better resource utilization

Performance Improvements:

The new architecture delivered impressive results:

Historical message fetch latency: 40-125ms → 15ms (p99)

Message insert latency: 5-70ms → steady 5ms (p99)

Node count reduced: 177 → 72

Storage efficiency: 4TB → 9TB per node

Results and Impact

Performance Improvements

Reduced node count: 177 → 72

Storage efficiency: 9TB per node (up from 4TB)

Latency improvements:

Historical message fetching: 40-125ms → 15ms (p99)

Message insertion: 5-70ms → 5ms (p99)

Real-world Stress Test: World Cup Final

The World Cup Final The true test of the new system came during the 2022 World Cup Final. The match between Argentina and France created distinct traffic patterns that demonstrated the system's resilience:

Traffic Spikes Analysis:

Initial Goal (Messi penalty): 2.1x normal traffic

Double Goal Sequence: 2.8x normal traffic

Mbappe's Quick Double: 3.5x normal traffic

Penalty Shootout: 4.2x normal traffic

The system handled these spikes without any degradation in performance, proving the effectiveness of the new architecture.

Operational Benefits

Reduced on-call incidents

Simplified maintenance procedures

Better resource utilization

Enhanced system predictability

Technical Deep Dive: Architecture Components

1. Storage Layer Design

Client Request

↓

Load Balancer

↓

API Gateway

↓

Data Service (Rust)

├── Request Coalescing

├── Consistent Hashing

└── Traffic Shaping

↓

ScyllaDB Cluster

├── Shard-per-core

├── Local SSDs

└── RAID Configuration2. Data Service Implementation

Key components:

// Simplified routing implementation

struct Router {

ring: ConsistentHashRing,

services: Vec<ServiceEndpoint>,

}

impl Router {

fn route_request(&self, channel_id: u64) -> &ServiceEndpoint {

let hash = self.ring.get_node(channel_id);

&self.services[hash]

}

}3. Monitoring and Observability

Implemented metrics:

Request coalescing efficiency

Per-channel throughput

Latency distributions

Storage utilization

Hot partition detection

Lessons Learned

Database Selection:

Consider operational complexity, not just features

Factor in team expertise and maintenance overhead

Test real-world access patterns, not just benchmarks

Architecture Design:

Implement request coalescing early

Use consistent hashing for better resource utilization

Build observability into the system from day one

Migration Strategy:

Don't be afraid to build custom tools

Validate data continuously during migration

Plan for the unexpected (like those pesky tombstones)

Technology Choices:

Rust proved invaluable for systems programming

ScyllaDB's C++ implementation eliminated GC concerns

Local SSDs with RAID mirroring provided optimal performance

Future Considerations

Scaling Beyond Trillions

Investigating new storage technologies

Exploring message archival strategies

Evaluating compression improvements

Feature Enhancement

Enhanced search capabilities

Improved analytics support

Better data locality

Operational Improvements

Automated scaling

Enhanced monitoring

Predictive maintenance

Conclusion

Discord's journey from billions to trillions of messages showcases the importance of pragmatic engineering decisions, the value of custom tooling, and the benefits of choosing the right technology stack. Their success demonstrates that with careful planning, innovative solutions, and a willingness to challenge conventional approaches, even the most daunting scaling challenges can be overcome.

Are you working on similar scaling challenges? Consider:

Evaluating your current architecture

Implementing request coalescing

Exploring modern database solutions

Contributing to open-source tools

Are you facing similar scaling challenges? We'd love to hear about your experiences and solutions. Share your thoughts in the comments below.

References:

scylladb.com/product/technology/shard-per-core-architecture/

discord.com/blog/how-discord-stores-trillions-of-messages

Disclaimer: The details in this post have been derived from the Discord Engineering Blog. All credit for the technical details goes to the Discord engineering team. The links to the original articles are present in the references section at the end of the post. We’ve attempted to analyze the details and provide our input about them. If you find any inaccuracies or omissions, please leave a comment, and we will do our best to fix them.